The Autonomous Weapons Arms Race

Warfare in the near future will increasingly be fought between autonomous weapons systems. What are the implications?

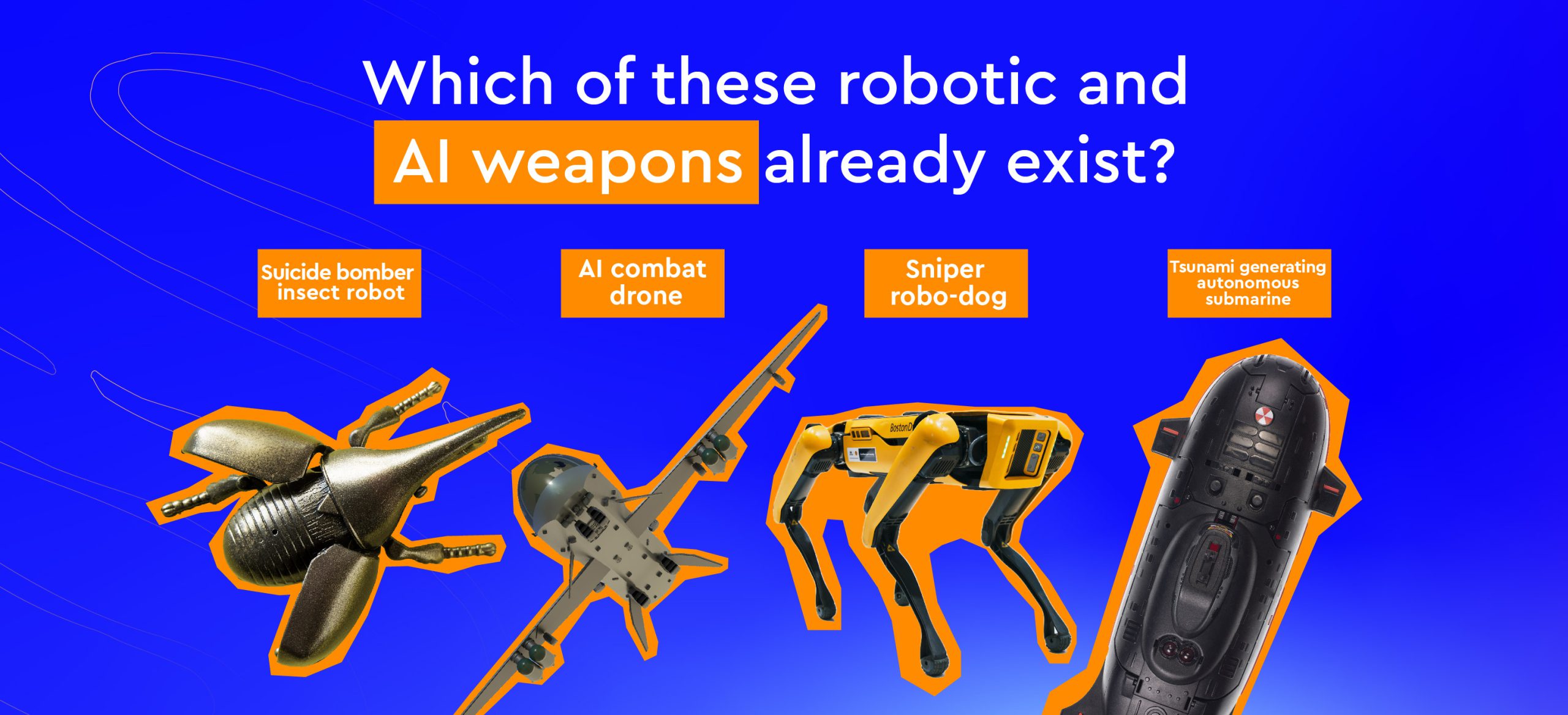

For thousands of years, human lives have been lost in battle, but the battlegrounds of the future may not include flesh-and-blood soldiers at all. Warfare in the near future will increasingly be fought between autonomous weapons systems: artificially intelligent weapons that can select targets on their own and decide how, when and where to harm them.

Proponents of these weapons say they take emotions out of the equation and allow for more precise targeting. However, who will be held responsible for mistakes made by robots, and how can machines be taught about ethics? Can we be sure these “killer robots” will distinguish between armed soldiers and civilians? And if fewer soldiers are killed or injured, will politicians face less pressure to end wars, allowing robot wars to drag on indefinitely?

The UN Secretary-General has called these technologies “morally repugnant and politically unacceptable”, yet the UN has so far failed to agree on a ban. Although the dangers of an AI arms race are only now coming to light, they are bound to change geopolitics forever.

Group Activity

- Divide the group into several teams.

- Pick one of the questions from the list below, have each team discuss it, and then ask the teams to present their thoughts to the group. Alternatively, assign each team a different question, and when a team presents, ask the remaining teams for their thoughts as well.

Questions

- What could be the consequences of allowing weapon systems to decide when, where and how to operate?

- How would you program a robot to know the difference between civilian and military targets?

- Are there ways in which robots could be better at making decisions in war than humans?

- If more and more robots are used in wars, do you think wars would be more or less likely to happen? Why?

- How do you think a soldier might feel knowing they were fighting robots instead of people?

- Do you think it’s possible to have a world where robots do all the fighting and no humans get hurt? Would that be a good or bad thing?

- If a robot commits war crimes, who should be held accountable?

To delve deeper, give each team an article from the list below (you can assign all of the articles or choose among them). Ask each team to summarize the article’s main argument(s) and present it to the group. Things to note and address: Where was the article published? (A magazine? A news website? An academic journal?) Who is the author? (A columnist? An academic?) Each team can also turn the article’s core argument into a slogan (“AI deletes the I”, “The solution is evolution”, etc.). Finally, ask each team to share its own thoughts on the article with the group.

- Article 1: UN fails to agree on ‘killer robot’ ban as nations pour billions into autonomous weapons research. The Conversation, December 2021

- Article 2: Autonomous Weapons Are Here, but the World Isn’t Ready for Them. Wired, December 2021

- Article 3: The world can halt an arms race over killer robots. Why is the Biden administration standing in the way? The Washington Post, December 2021